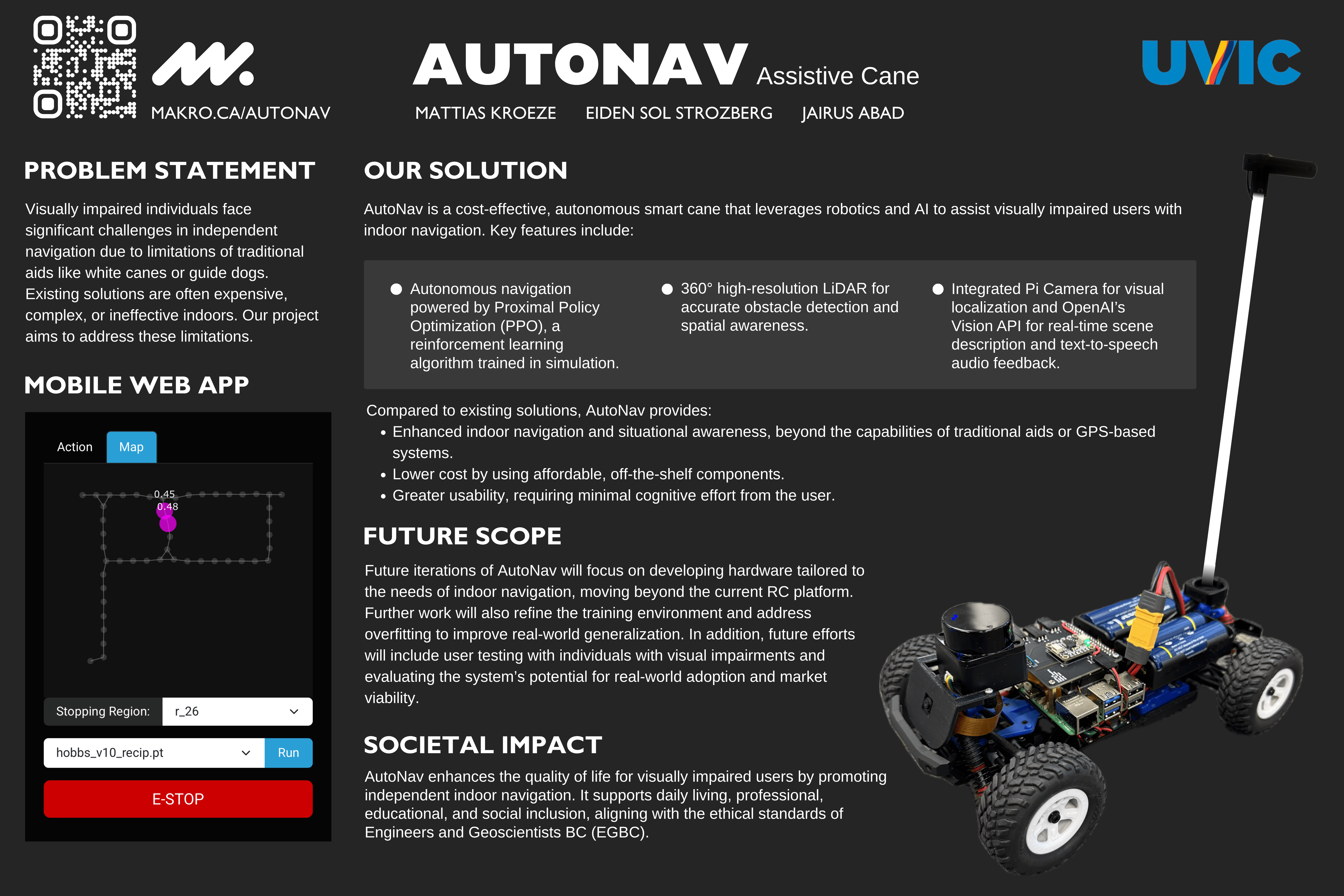

AutoNav Assistive Cane

READ THE FULL PAPER HERE

Acknowledgements

We would like to thank Dr. Papadopoulos for his guidance during the planning and analysis stages of this project. His real-world engineering insights were instrumental in shaping our approach.

We also gratefully acknowledge Professor Sana, the teaching assistants, and the Department of Electrical and Computer Engineering at the University of Victoria for their instruction and for organizing the Capstone Demo Competition.

Additional thanks to our families and friends for their encouragement throughout this project.

Problem Statement

Navigating indoor environments independently remains a major challenge for individuals with visual impairments. Traditional aids such as white canes and guide dogs offer limited support: they require users to memorize layouts and provide little to no autonomous guidance. GPS is unreliable indoors, and most advanced mobility solutions are either prohibitively expensive or not user-friendly. We wanted to create a tool that could help people navigate familiar indoor spaces, such as schools, offices, and homes, with confidence, safety, and autonomy.

Project Overview

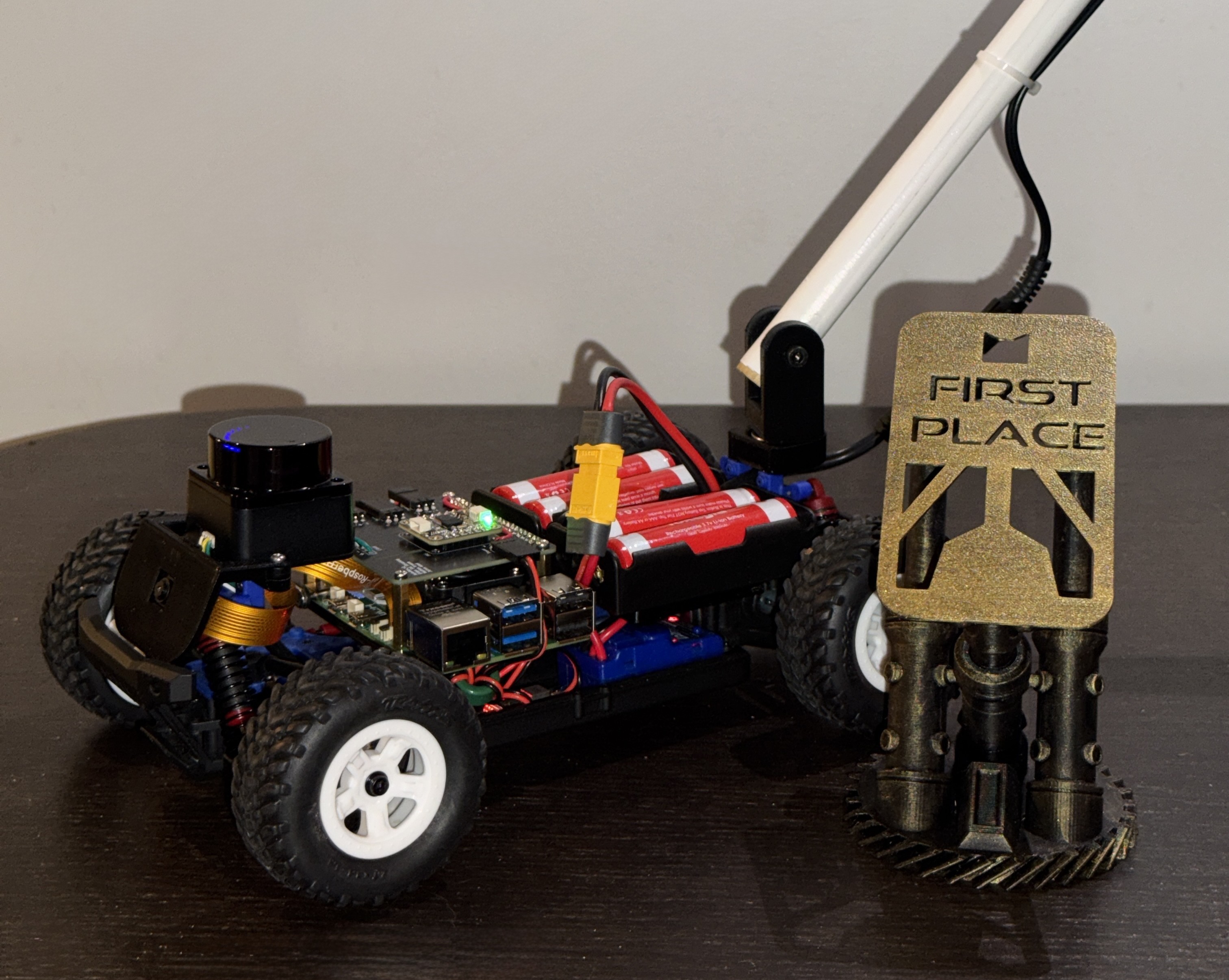

AutoNav is a low-cost, autonomous smart cane designed to assist visually impaired individuals with indoor navigation. It uses a Raspberry Pi 5 mounted to a 1/18-scale RC car equipped with a 360° LiDAR sensor, an IMU, and a Pi Camera. Its navigation policy was trained in Isaac Sim with Proximal Policy Optimization (PPO), a reinforcement learning algorithm, enabling it to follow hallways and avoid obstacles in real time. The cane also includes a scene description feature powered by OpenAI’s Vision and TTS APIs, triggered by a secondary button.
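For readers unfamiliar with PPO, its central idea is a clipped surrogate objective that limits how far each policy update can move from the previous policy. The sketch below is a plain-Python illustration of that objective only; it is not the training code used for AutoNav. The function name, the toy inputs, and the clip range of 0.2 are assumptions chosen for illustration.

```python
import math

def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch of samples.

    new_logp / old_logp: log-probabilities of the taken actions under the
    updated and the previous policy. clip_eps is an assumed value.
    """
    terms = []
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the unclipped and clipped terms.
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)

# Toy batch (hypothetical numbers): one action became twice as likely.
objective = ppo_clip_objective(
    new_logp=[math.log(0.5)],
    old_logp=[math.log(0.25)],
    advantages=[1.0],
)
print(objective)
```

With a positive advantage, the gain from raising an action's probability is capped once the probability ratio exceeds 1 + clip_eps, which is what keeps individual updates small.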

Platform Design

The platform uses off-the-shelf hardware to keep costs low. Major components include the LaTrax Prerunner RC car, Raspberry Pi 5 (8 GB), LD19 LiDAR, BNO085 IMU, and a custom breakout PCB. Power is provided by a 2S2P 18650 lithium-ion pack and a UBEC regulator, allowing for roughly 2 to 4 hours of runtime. A custom 3D-printed chassis and an ergonomic cane handle were designed to mount and interface with all hardware securely and comfortably.
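A runtime figure like this can be sanity-checked with simple energy arithmetic: pack energy divided by average power draw. The sketch below shows the calculation only. The cell voltage (3.6 V nominal), cell capacity (3.0 Ah), regulator efficiency (0.9), and the 10 W and 20 W load figures are all assumptions for illustration, not measurements from the prototype.

```python
def runtime_hours(series, parallel, cell_voltage_v, cell_capacity_ah,
                  avg_power_w, regulator_efficiency=1.0):
    """Estimate runtime from pack energy (Wh) and average load (W)."""
    pack_voltage_v = series * cell_voltage_v        # cells in series add voltage
    pack_capacity_ah = parallel * cell_capacity_ah  # parallel strings add capacity
    pack_energy_wh = pack_voltage_v * pack_capacity_ah
    return pack_energy_wh * regulator_efficiency / avg_power_w

# 2S2P pack with ASSUMED cell specs and ASSUMED light/heavy loads.
for load_w in (10.0, 20.0):
    hours = runtime_hours(series=2, parallel=2, cell_voltage_v=3.6,
                          cell_capacity_ah=3.0, avg_power_w=load_w,
                          regulator_efficiency=0.9)
    print(f"{load_w:.0f} W load: {hours:.1f} h")
```

Swapping in the actual cell datasheet values and a measured average current would turn this into a real estimate.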

Simulation and Model Training

The PPO model was trained in Isaac Sim using a 1:1 digital twin of the third floor of UVic’s Engineering Lab Wing (ELW), reconstructed from LiDAR scans taken with an iPhone 15 Pro. The simulated environment was divided into 49 labeled regions, and the model was trained to navigate any point-to-point route planned with the A* algorithm.
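To illustrate the route-planning step, here is a minimal A* sketch over a small grid of regions. It is not the project's planner: the 5×5 grid, the blocked cells, the unit step cost, and the Manhattan heuristic are all hypothetical stand-ins, since the real adjacency between the 49 regions is not described here.

```python
import heapq

def astar(neighbors, heuristic, start, goal):
    """Return the lowest-cost path from start to goal, or None if unreachable."""
    open_heap = [(heuristic(start, goal), 0, start)]  # (f = g + h, g, node)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = [node]
            while node in came_from:  # walk parent links back to start
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if cost > best_cost[node]:
            continue  # stale heap entry; a cheaper route was already found
        for nxt, step in neighbors(node):
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = node
                heapq.heappush(
                    open_heap, (new_cost + heuristic(nxt, goal), new_cost, nxt))
    return None

SIZE = 5  # hypothetical toy grid, not the real floor layout
BLOCKED = {(1, 1), (1, 2), (1, 3), (3, 1), (3, 2), (3, 3)}  # hypothetical walls

def grid_neighbors(cell):
    """Yield (neighbor, step_cost) for the four adjacent, unblocked cells."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < SIZE and 0 <= nc < SIZE and (nr, nc) not in BLOCKED:
            yield (nr, nc), 1

def manhattan(a, b):
    """Admissible heuristic for 4-connected grids with unit step cost."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

route = astar(grid_neighbors, manhattan, (0, 0), (4, 4))
print(route)
```

In a setup like the one described, the resulting sequence of regions would serve as the waypoints the learned policy is asked to follow.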

Localization and Scene Description

To support navigation in perceptually aliased environments, where different locations look nearly identical, a Visual Place Recognition (VPR) system was implemented using EfficientNet-B0. It compares camera embeddings against a database of labeled regions. When the user-defined destination is detected with high confidence, the robot stops. The secondary cane trigger activates a camera capture, which is sent to OpenAI’s Vision API. The returned description is converted to speech and played back to the user through a web interface.
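The embedding comparison can be sketched as a nearest-neighbor lookup with a confidence threshold. The example below assumes cosine similarity and a threshold of 0.9; neither is stated in the project description, and the three-dimensional vectors and region labels are toy stand-ins for real EfficientNet-B0 embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_match(query, database):
    """Return (label, similarity) of the database entry closest to the query."""
    scored = ((label, cosine_similarity(query, emb))
              for label, emb in database.items())
    return max(scored, key=lambda pair: pair[1])

def should_stop(query, database, destination, threshold=0.9):
    """Stop only if the best match is the destination AND it is confident."""
    label, score = best_match(query, database)
    return label == destination and score >= threshold

# Hypothetical database: one toy embedding per labeled region.
DATABASE = {
    "region_A": [1.0, 0.0, 0.0],
    "region_B": [0.0, 1.0, 0.0],
    "region_C": [0.0, 0.0, 1.0],
}

print(should_stop([0.95, 0.05, 0.0], DATABASE, "region_A"))  # clear match
print(should_stop([0.6, 0.5, 0.4], DATABASE, "region_A"))    # ambiguous view
```

Requiring both the correct label and a high score is what guards against stopping early in a hallway that merely resembles the destination.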

Results and Testing

The final prototype was tested in known and unknown indoor environments, including multiple buildings on the UVic campus, and in some outdoor areas. While the full pathfinding model struggled to transfer effectively from simulation to hardware, a fallback generalized model showed strong performance in hallway following and obstacle avoidance. The system also showed a bias toward right turns, which we attribute to an imbalance in the training data.

Cost and Recognition

Total direct hardware costs were $801.14 CAD. The project received $495 in funding and was awarded 1st place in the ECE 499 Capstone Competition with a perfect score. Judges cited the technical completeness and practical impact of the solution.

